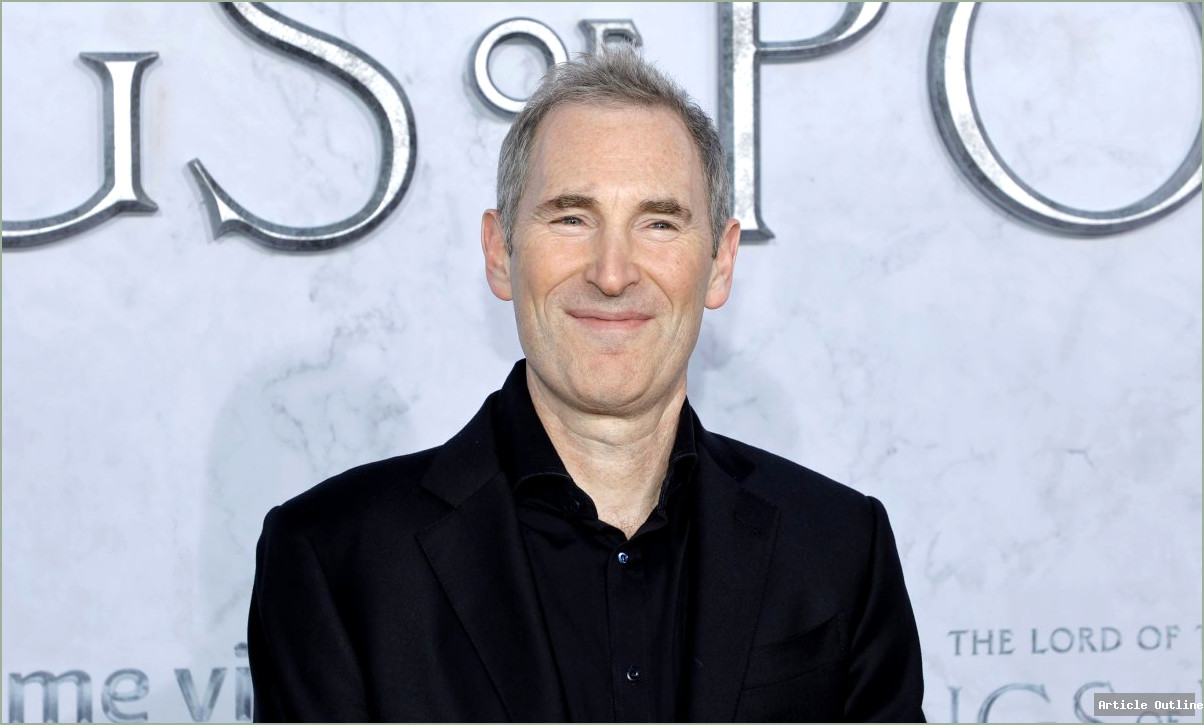

Forget the headlines about Nvidia’s AI dominance. Amazon is quietly building an empire with its homegrown Trainium chips, and the numbers are finally loud enough for everyone to hear. Amazon CEO Andy Jassy just revealed that Trainium, AWS’s answer to Nvidia’s GPUs, is now a multibillion-dollar business, with over 1 million chips in production and 100,000+ companies relying on it. Let’s unpack why this matters and why the real battle for AI supremacy is happening deep inside the cloud.

Why This Matters

- AI’s hardware bottleneck: Startups and enterprises alike are desperate for computing power to train and run AI models. Nvidia’s GPUs are in such demand that many companies face long wait times, sky-high costs, or outright supply shortages.

- AWS is now a credible alternative: By building its own chips, Amazon is no longer just a cloud provider—it’s a direct competitor to Nvidia, offering price and performance advantages within its massive cloud infrastructure.

- The stakes are enormous: The AI chip market is projected to exceed $400 billion by 2027. Even if Amazon only captures a slice, it’s a game-changer for both the company and the industry.

What Most People Miss

- Trainium’s revenue isn’t just hype: Amazon’s numbers are backed by real deployments, most notably Anthropic, a leading AI company that uses over 500,000 Trainium2 chips in Project Rainier, AWS’s AI compute cluster. That’s not just testing. It’s critical infrastructure for next-gen AI models like Claude.

- Strategic cloud lock-in: Amazon’s investment in Anthropic isn’t just financial. By making AWS Anthropic’s primary model training partner, Amazon is tying major AI development directly to its platform—a playbook reminiscent of Microsoft’s tight integration with OpenAI.

- Challenging CUDA’s grip: Nvidia’s dominance isn’t just about chips—it’s about software. Most AI applications are built for Nvidia’s CUDA ecosystem, making switching painful. Amazon’s roadmap (with Trainium4 designed to work alongside Nvidia GPUs) signals a long-term strategy to chip away at this advantage.

Key Takeaways

- AWS Trainium is a multibillion-dollar business with real traction: over 1 million chips in production and 100,000+ companies using it.

- Amazon’s focus on price-performance is classic AWS: undercut the competition while delivering scalable tech.

- Amazon is leveraging its cloud dominance and investments (like Anthropic) to bootstrap its silicon business, much as it did with other AWS services.

- True Nvidia disruption is hard: CUDA’s ecosystem and Nvidia’s networking tech (like the InfiniBand business it acquired with Mellanox) are major barriers. But Amazon’s interoperability plans show it’s playing the long game.

Industry Context & Comparisons

- Only a handful of companies (Google, Microsoft, Amazon, Meta) have the engineering muscle to design their own AI chips and the cloud scale to deliver them.

- We’ve seen similar platform wars before: Intel vs. SPARC then, Nvidia’s CUDA vs. everyone else now. The lesson? Software ecosystems make or break hardware platforms.

- The partnership webs are tangled: Anthropic is tied to AWS but also runs on Microsoft’s Azure (with Nvidia chips), and even OpenAI is now using AWS for some workloads.

Pros and Cons of AWS’s Trainium Strategy

- Pros:

  - Lower costs and higher performance for AI workloads on AWS

  - Reduced dependence on Nvidia’s supply chain and pricing

  - Tighter integration with AI startups (like Anthropic) and enterprise users

- Cons:

  - Breaking CUDA’s grip is a massive software challenge

  - True cross-cloud compatibility for customers is still a work in progress

  - Market perception still sees Nvidia as the “default” for cutting-edge AI

The Bottom Line

Amazon’s Trainium isn’t just a chip—it’s a strategic lever in the battle for the future of AI infrastructure. The real win is not (yet) dethroning Nvidia, but making AWS the indispensable home for the next wave of AI innovation. As Trainium3 and beyond roll out, expect the competition to get even hotter—and don’t be surprised if Amazon’s quiet chip hustle turns into the industry’s loudest victory lap.