Amazon Web Services (AWS) is pushing deeper into the artificial intelligence (AI) arms race with new features in Amazon Bedrock and SageMaker AI. The upgrades aim to make building custom large language models (LLMs) more accessible and practical for enterprise customers, which could shift the competitive landscape in cloud-based AI. Why does this matter, and what are the implications for businesses and the wider industry?

Why This Matters

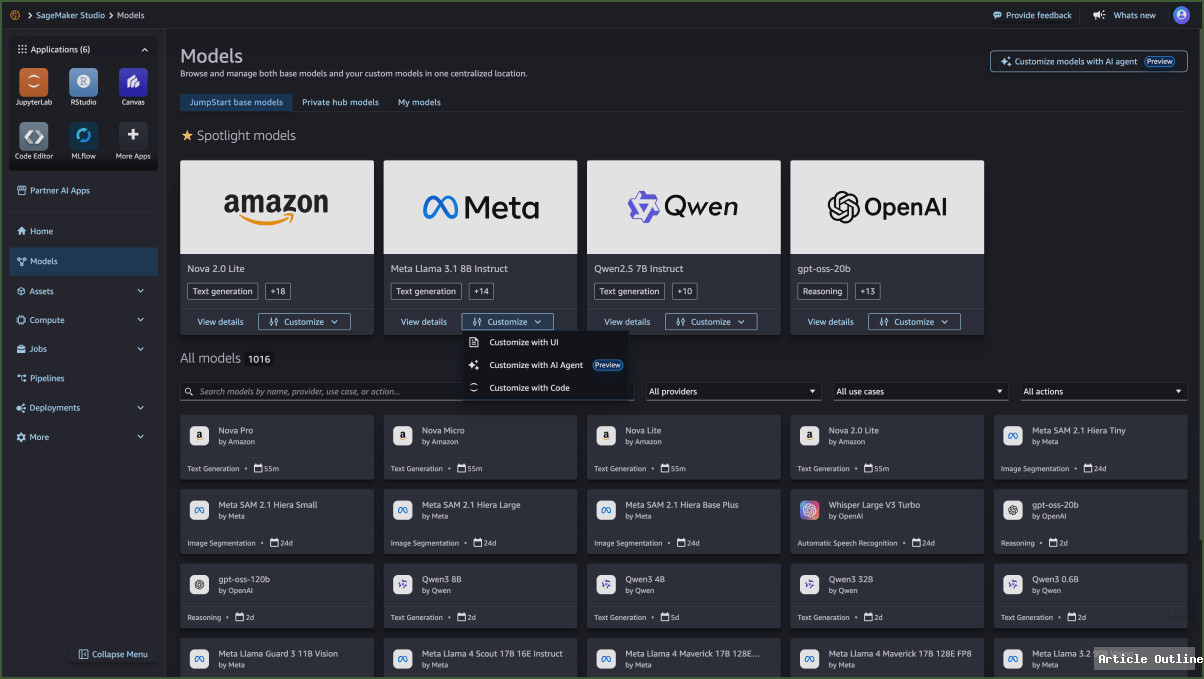

- Democratizing Custom AI: AWS is lowering the technical barriers for enterprises to build and fine-tune their own LLMs. Serverless customization in SageMaker means companies no longer need deep machine learning expertise or massive infrastructure to get started.

- Enterprise Differentiation: As more businesses adopt AI, the question becomes: “How do I stand out if everyone has access to the same models?” AWS’s push for custom models directly addresses this, letting companies tune models to their unique needs, brand voice, and proprietary data.

- Competitive Pressure: AWS trails in LLM popularity; recent surveys show most enterprises prefer models from Anthropic and OpenAI, or Google’s Gemini. This move is a strategic effort to regain ground by offering seamless, scalable customization as a differentiator.

What Most People Miss

- Serverless = Simpler, Faster Iteration: The new serverless approach in SageMaker isn’t just about saving on IT resources. It accelerates the experimentation cycle, allowing non-experts to test ideas and deploy solutions rapidly.

- Agent-Led Experiences: The introduction of natural language-driven, agent-led customization (even if just in preview) signals a future where building powerful AI models could be as intuitive as chatting with a digital assistant.

- Reinforcement Fine-Tuning in Bedrock: By automating reward-based fine-tuning, AWS is opening the door to more specialized, behavior-driven AI applications—think medical, legal, or industry-specific assistants that learn from feedback loops.
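To make the idea of reward-based feedback concrete, here is a minimal sketch of what a reward signal might look like. It is not the Bedrock API and does not reflect how AWS implements reinforcement fine-tuning; the `Candidate` class, the `reward` function, and the scoring rule (required-term coverage minus a length penalty) are all hypothetical, chosen only to illustrate how responses could be scored so that preferred behavior is reinforced.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One model response to a prompt (hypothetical structure)."""
    prompt: str
    response: str


def reward(candidate: Candidate, required_terms: list[str], max_words: int = 60) -> float:
    """Hypothetical reward in [0, 1]: term coverage minus a length penalty."""
    if not required_terms:
        raise ValueError("required_terms must not be empty")
    text = candidate.response.lower()
    # Fraction of required terms that appear in the response.
    coverage = sum(term.lower() in text for term in required_terms) / len(required_terms)
    # Flat penalty for responses longer than max_words.
    length_penalty = 0.5 if len(candidate.response.split()) > max_words else 0.0
    return max(0.0, coverage - length_penalty)


def rank_by_reward(candidates: list[Candidate], required_terms: list[str]) -> list[Candidate]:
    """Order candidates from highest to lowest reward."""
    return sorted(candidates, key=lambda c: reward(c, required_terms), reverse=True)


if __name__ == "__main__":
    prompt = "Can I double my dose?"
    candidates = [
        Candidate(prompt, "Just take more."),
        Candidate(prompt, "Consult a licensed physician before changing your dosage."),
    ]
    terms = ["physician", "dosage"]
    for c in rank_by_reward(candidates, terms):
        print(f"{reward(c, terms):.2f}  {c.response}")
```

In a real reinforcement fine-tuning setup, scores like these would feed a training loop that nudges the model toward higher-reward responses; the sketch covers only the scoring step.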

Key Takeaways

- Custom LLMs are the new battleground: Enterprises want models that reflect their brand, values, and data. AWS is betting that easy customization is the key to unlocking this next wave.

- Cost is still a factor: Nova Forge, AWS’s white-glove custom model service, starts at $100,000/year. While serverless tools democratize access, high-end customization remains a premium offering—for now.

- Ecosystem effects: By supporting both its own Nova models and open-source options like Meta’s Llama and DeepSeek, AWS is positioning itself as a flexible platform, not a walled garden.

Actionable Insights & Industry Context

- For Developers: Experiment with the agent-led customization experience in SageMaker (currently in preview) to build prototypes quickly, even without a PhD in AI.

- For Enterprises: Consider how proprietary LLMs trained on your internal data can create competitive moats—especially as generative AI moves from hype to real business value.

- For the Industry: Expect a surge in AI-powered vertical applications, as customization becomes more accessible and affordable.

The Bottom Line

AWS’s new custom LLM features signal that enterprise AI is maturing: the question is no longer just who has access to cutting-edge models, but how closely those models can be tailored to your business. While AWS has lagged in AI model popularity, these tools could give it a meaningful differentiator if they deliver on their promise of simplicity, flexibility, and scalability. For businesses, the message is clear: customization is the next frontier in AI advantage.