Unlock the Full Potential of Machine Learning APIs with LitServe

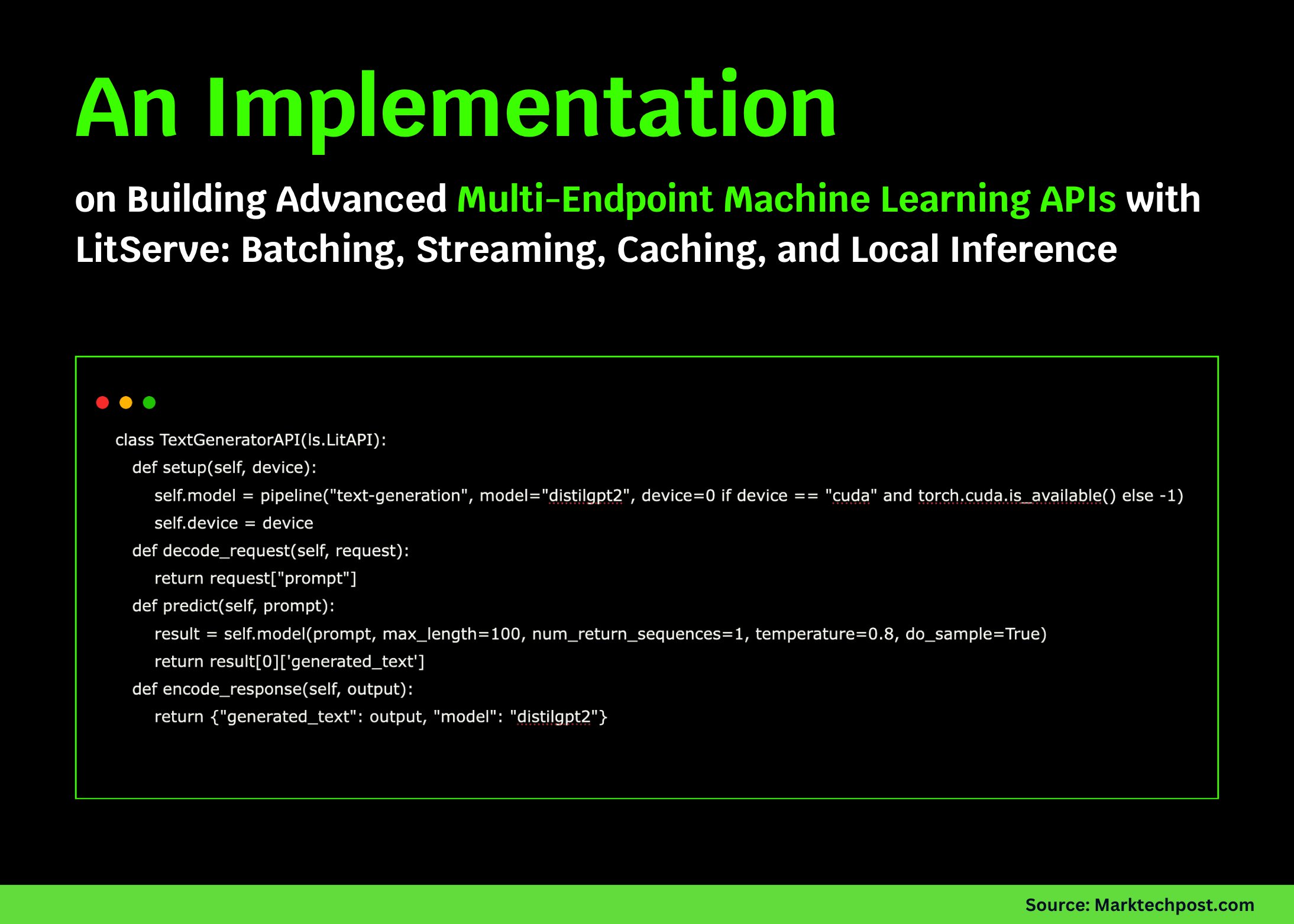

LitServe has emerged as a revolutionary tool for developers looking to create advanced multi-endpoint machine learning APIs. By providing seamless support for batching, streaming, caching, and local inference, LitServe makes it easier than ever to deploy scalable and efficient ML solutions.

Key Features: Batching, Streaming, Caching, and Local Inference

With LitServe, you can manage multiple endpoints from a single API deployment. This flexibility lets you serve various machine learning models efficiently, catering to diverse application needs. Batching helps you handle high volumes of requests by grouping them for faster processing. Streaming enables real-time data handling, perfect for applications demanding instant responses. Caching reduces repeated computations, thus boosting overall API performance. Local inference brings computation closer to the data source, minimizing latency and enhancing user experience.

Developers can now build, deploy, and scale machine learning APIs effortlessly, thanks to LitServe’s robust architecture. If you want to stay ahead in the fast-evolving AI landscape, integrating LitServe into your tech stack will give your applications a significant edge.