OpenAI Blames User for Tragic Incident

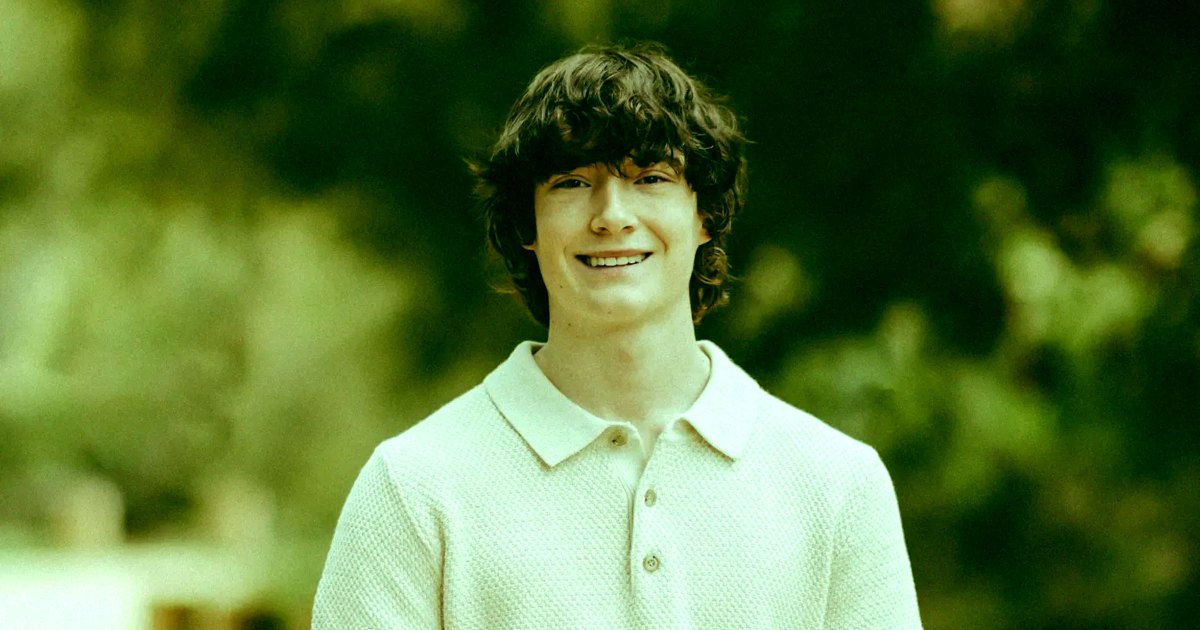

OpenAI has come under intense scrutiny after a heartbreaking incident involving a 16-year-old boy who took his own life. In a court filing responding to a lawsuit brought by his family, the company argued that the teenager had misused ChatGPT, in effect blaming him for his own death. This stance has sparked outrage and raised serious questions about the responsibilities of AI companies when it comes to user safety and mental health.

AI Responsibility and Public Reaction

Critics argue that OpenAI’s response lacks empathy and fails to acknowledge the risks of releasing AI chatbots to the public, especially to vulnerable users such as teenagers. The case has reignited the debate over ethical AI and the need for mental health safeguards. If you thought AI would only take your job, think again: apparently, it can take a lot more if we’re not careful.

This tragic event is a reminder that technology companies must take responsibility for how their products affect people’s lives. Pointing fingers at users instead of addressing design flaws or missing support systems is not the way forward. As AI becomes more integrated into our daily lives, we deserve better, because “user error” shouldn’t be a shield against corporate accountability.
