Understanding the Limitations of Vision-Language Models

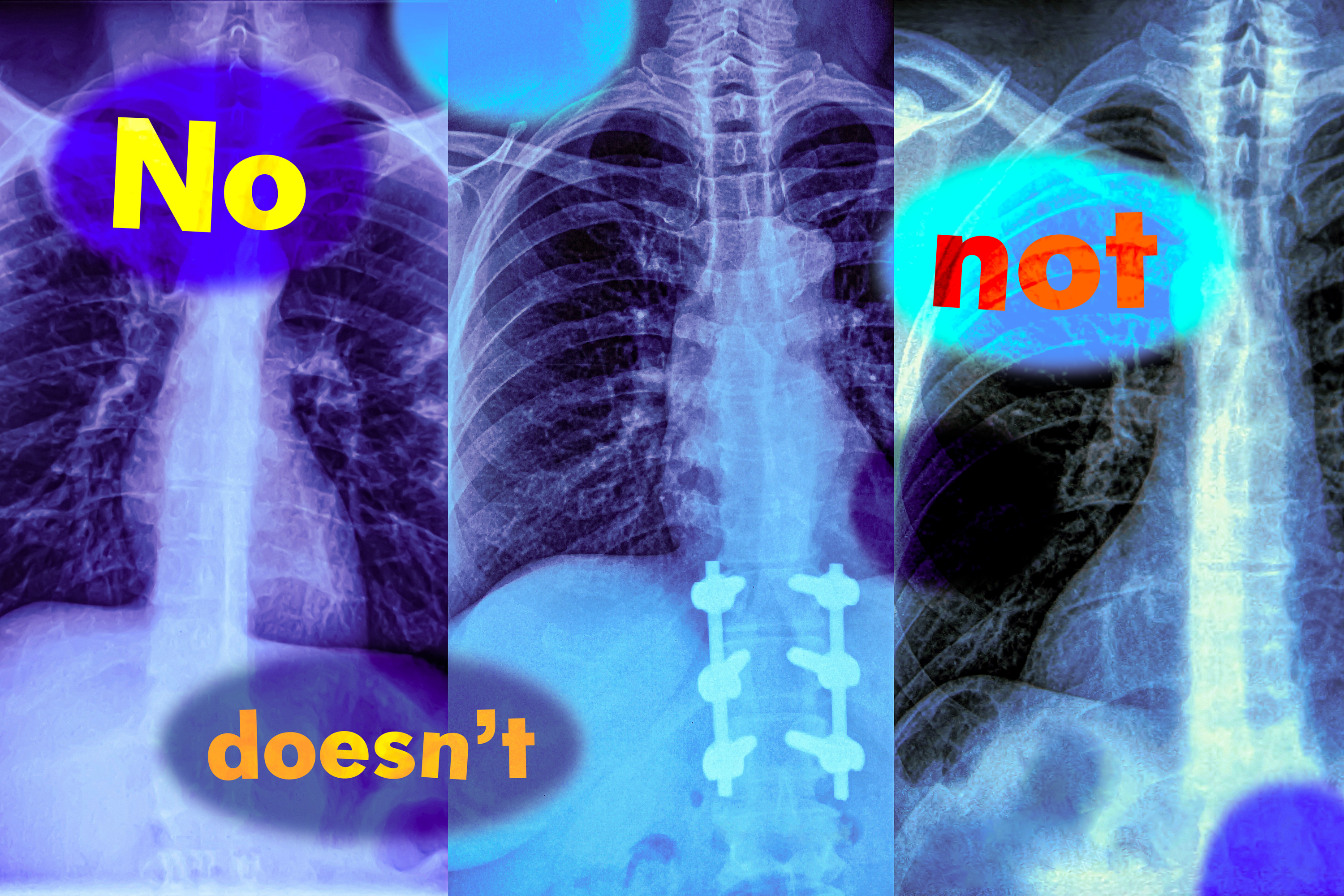

Recent research from MIT highlights a critical limitation in vision-language models. These models, which are increasingly used to analyze medical images, struggle to understand negation words such as “no” and “not”. As a result, they can fail unexpectedly when asked to retrieve images that contain certain objects but not others.
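The retrieval failure described above can be made concrete with a toy sketch. It is not how the MIT study was run, nor how a vision-language model works internally: the image collection, its labels, and both function names are hypothetical, and the "negation-blind" function is only an assumed simplification in which an excluded word is treated as one more mentioned object.

```python
# Toy, hand-labelled "image collection": file name -> objects present.
# All names and labels are hypothetical, for illustration only.
IMAGES = {
    "a.png": {"dog", "cat"},
    "b.png": {"dog"},
    "c.png": {"cat"},
    "d.png": {"tree"},
}


def retrieve(include, exclude):
    """Intended behaviour: contains every `include` object and no `exclude` object."""
    return sorted(
        name
        for name, objs in IMAGES.items()
        if include <= objs and not (exclude & objs)
    )


def retrieve_ignoring_negation(include, exclude):
    """Assumed negation-blind behaviour: 'no cat' counts as a mention of 'cat'."""
    mentioned = include | exclude
    return sorted(name for name, objs in IMAGES.items() if mentioned & objs)


# Query: "images with a dog but no cat"
correct = retrieve({"dog"}, {"cat"})
blind = retrieve_ignoring_negation({"dog"}, {"cat"})

print("with negation respected:", correct)
print("with negation ignored:  ", blind)
```

In this sketch only `b.png` satisfies the query, while the negation-blind version also returns images that contain a cat, which is exactly the kind of result the query asked to exclude.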

This finding raises significant concerns about the reliability of these models in high-stakes fields like healthcare. If a vision-language model misreads a query because it cannot process negation, for example a request for scans that show one finding but not another, the result could be an incorrect or incomplete medical analysis. As reliance on artificial intelligence grows, it is crucial that these systems handle negation, and language more broadly, accurately.